A Comprehensive FiguraSVC Guide for Speech Visualization

Preemptive Rambles

FiguraSVC has one primary goal: to enable real-time audio visualization within Figura using Simple Voice Chat. While the mod is designed to be accessible, implementing it requires some familiarity with Figura's API and potentially a touch of linear algebra for advanced mouth movements.

Prerequisites

- An existing Figura avatar is required. This guide does not cover avatar creation; there are plenty of very helpful resources for that.

- A basic understanding of Lua and the Figura API (at least for the first section).

- (Optional) Familiarity with 3D animation concepts (like rigging and rotation) for the more advanced method.

There are a few methods of adding mouth movement:

- The Easy Way: Using pings to make the mouth move up and down.

- The Hard Way: Using FiguraSVC events and the `voice` API to make the mouth move up and down based on actual audio levels.

- The Even Harder Way: Detecting phonemes from the audio data to drive mouth shapes (not covered here). This is extremely difficult and math-intensive. FiguraSVC may offer tools for this in the future, but currently, it requires manual implementation.

The Easy Way

This system is remarkably simple. All you’re really doing is turning a mouth movement animation on and off based on whether your microphone is active.

Mouth Rigging

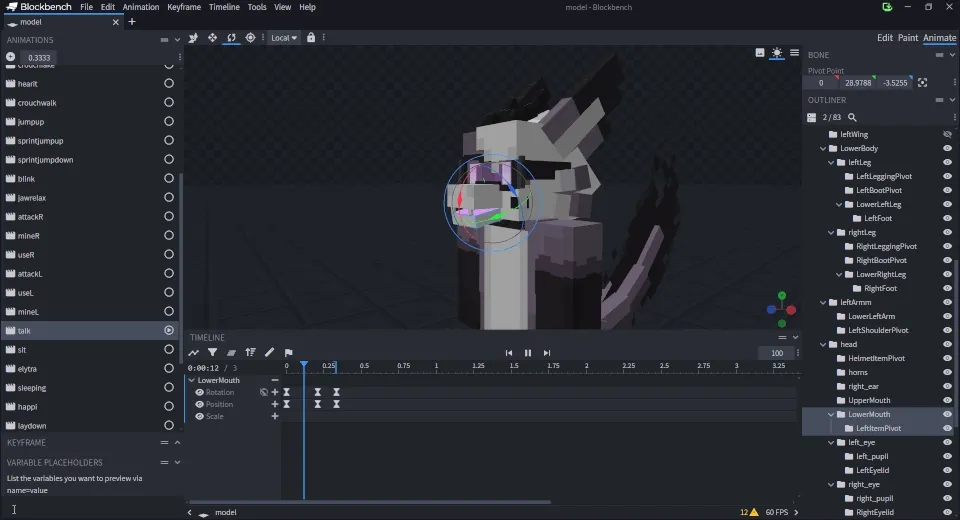

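You just need to create an animation in Blockbench that represents talking. In this example, the animation is named `talk`; in the script it is accessed as `animations.model.talk`, where `model` is the name of the `.bbmodel` file.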

Your talking animation should look something like this:

Programming the Mouth Movement

Get ready for the most difficult challenge ever (just kidding, this is super easy).

In versions of FiguraSVC prior to version 2.1, you would’ve had to write much more boilerplate code. But now, thanks to technology, you only need to add a couple of lines to your avatar’s script.

```lua
-- This ping function allows compatibility with clients *without* FiguraSVC installed.
-- It receives the state, which is a boolean value (true for talking, false for not talking).
function pings.on_talk(state)
  animations.model.talk:setPlaying(state)
end

-- Only run the FiguraSVC-specific code if the mod is loaded.
if client:isModLoaded("figurasvc") then
  -- This runs only on the *host client* (the user whose avatar it is) and sends the ping.
  voice:pingOnActive(pings.on_talk)
end
```

Code snippets for older versions:

FiguraSVC 2.0

```lua
local microphoneOffTime = 0
local isMicrophoneOn = false

function pings.talking(state)
  -- toggle animation here
  animations.model.talk:setPlaying(state)
end

function events.tick()
  local previousMicState = isMicrophoneOn
  microphoneOffTime = microphoneOffTime + 1
  isMicrophoneOn = microphoneOffTime <= 2

  if previousMicState ~= isMicrophoneOn then
    pings.talking(isMicrophoneOn)
  end
end

if client:isModLoaded("figurasvc") and host:isHost() then
  function events.host_microphone()
    microphoneOffTime = 0
  end
end
```

FiguraSVC 1.1 and 1.0

```lua
local microphoneOffTime = 0
local isMicrophoneOn = false

function pings.talking(state)
  -- toggle animation here
  animations.model.talk:setPlaying(state)
end

function events.tick()
  local previousMicState = isMicrophoneOn
  microphoneOffTime = microphoneOffTime + 1
  isMicrophoneOn = microphoneOffTime <= 2

  if previousMicState ~= isMicrophoneOn then
    pings.talking(isMicrophoneOn)
  end
end

if client:isModLoaded("figurasvc") and host:isHost() then
  events["svc.microphone"] = function()
    microphoneOffTime = 0
  end
end
```

The `voice:pingOnActive` approach used in FiguraSVC 2.1 and later is technically all you need. It sends a standard Figura ping, so anyone with Figura (even without FiguraSVC) can see your avatar talk.
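If you are curious how the older snippets decide when you are talking: every microphone packet resets `microphoneOffTime` to 0, every tick adds 1, and the microphone counts as "on" while the counter is at most 2. The plain-Lua sketch below simulates that logic outside Figura so you can see exactly when the ping would fire. `simulate` and its packet schedule are a hypothetical test harness, and `sendPing` stands in for `pings.talking`.

```lua
-- Plain-Lua simulation (runs outside Figura) of the tick-counter logic in the
-- FiguraSVC 2.0 and 1.x snippets. `simulate` is a hypothetical harness:
-- packetTicks lists the ticks during which a microphone packet arrived, and
-- sendPing stands in for pings.talking.
local function simulate(totalTicks, packetTicks, sendPing)
  local microphoneOffTime = 0 -- same starting value as the snippets
  local isMicrophoneOn = false

  local arrived = {}
  for _, t in ipairs(packetTicks) do
    arrived[t] = true
  end

  for tick = 1, totalTicks do
    -- Stand-in for the microphone event: any packet resets the counter.
    if arrived[tick] then
      microphoneOffTime = 0
    end

    -- Same logic as events.tick in the snippets.
    local previousMicState = isMicrophoneOn
    microphoneOffTime = microphoneOffTime + 1
    isMicrophoneOn = microphoneOffTime <= 2

    -- Only a change of state sends a ping.
    if previousMicState ~= isMicrophoneOn then
      sendPing(tick, isMicrophoneOn)
    end
  end
end

-- Usage: talking during ticks 1-5, then silence.
local pingLog = {}
simulate(10, { 1, 2, 3, 4, 5 }, function(tick, state)
  pingLog[#pingLog + 1] = { tick = tick, state = state }
end)
-- pingLog holds two entries: talking starts on tick 1 and stops on tick 7.
```

Because the counter has to exceed 2 ticks (100 ms at 20 ticks per second) before the state flips, a short gap between packets does not toggle the animation; the cost is that the mouth keeps moving for a couple of ticks after you stop. The simulation also shows a quirk of starting the counter at 0: with no audio at all, the first two ticks still report "on". Starting the counter at 3 instead would avoid that.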

That’s it for the easy method! With just a few lines, you have basic mouth movement synchronized with your voice activity.

While this method is super simple, it is purely binary: the mouth is either moving or it isn't, regardless of how loudly you speak.

If you desire more dynamic and expressive mouth movements based on audio levels, you’ll need to dive into…

The Hard Way


While FiguraSVC seems (and is) very simple when doing basic mouth movement, the mod itself has a lot of low-level features that can be used to create genuinely compelling mouth movement.

This approach moves beyond simple animation toggling. We’ll use the intensity (level) of your voice, derived from raw audio data, to drive the mouth’s movement directly in Lua. This is more complex but allows for much more nuance.

A Lesson in Audio Processing

(Feel free to skim this if you prefer to jump to the implementation)

FiguraSVC provides access to the raw audio stream captured from Simple Voice Chat. This data comes in a specific format known as Pulse-Code Modulation, or PCM. Essentially, PCM represents the sound wave of your voice as a series of snapshots, capturing the sound wave’s amplitude (loudness) at many points in time.

Simple Voice Chat sends these samples in packets. Each packet typically contains 960 16-bit samples, which at Simple Voice Chat's 48 kHz sample rate is 20 ms of audio, so packets arrive about 50 times per second rather than once per Minecraft game tick (20 times per second). Directly using these raw values to drive animation would result in extremely jittery movement.
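To make the numbers concrete, the plain-Lua sketch below (it uses no Figura or FiguraSVC API) treats one packet as an array of 960 signed 16-bit samples and reduces it to a single number using the root-mean-square (RMS) amplitude, a common loudness measure. This is only an illustration: FiguraSVC's `voice:getLevel` may compute its level differently, `frameRms` and `frameDurationMs` are hypothetical helpers, and the 48 kHz sample rate is an assumption.

```lua
-- Plain-Lua sketch (runs outside Figura): reduce one PCM packet to a single
-- loudness value using root-mean-square (RMS) amplitude.
-- Assumptions: samples are signed 16-bit integers (-32768..32767) at 48 kHz.
-- frameRms and frameDurationMs are hypothetical helpers, not FiguraSVC functions.
local SAMPLE_RATE = 48000 -- samples per second (assumed)
local FRAME_SIZE = 960    -- samples per packet

-- RMS amplitude of one frame, normalized so full scale is about 1.0.
local function frameRms(samples)
  if #samples == 0 then
    return 0
  end
  local sumOfSquares = 0
  for i = 1, #samples do
    local s = samples[i] / 32768 -- scale the 16-bit sample to roughly -1..1
    sumOfSquares = sumOfSquares + s * s
  end
  return math.sqrt(sumOfSquares / #samples)
end

-- Length of one frame in milliseconds.
local function frameDurationMs(frameSize, sampleRate)
  return frameSize * 1000 / sampleRate
end

-- Usage: a silent frame, and a frame alternating between +16384 and -16384
-- (half of full scale), whose RMS is exactly 0.5.
local silent, halfScale = {}, {}
for i = 1, FRAME_SIZE do
  silent[i] = 0
  halfScale[i] = (i % 2 == 0) and 16384 or -16384
end
print(frameRms(silent), frameRms(halfScale), frameDurationMs(FRAME_SIZE, SAMPLE_RATE))
```

One RMS value per packet is already far less noisy than the raw samples, but consecutive packets can still differ a lot, which is why the level is smoothed before it drives the jaw.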

We need to process this raw data and translate it into the smoother, more coherent motion we perceive as talking. While our end result might not be perfectly accurate (we are only measuring audio levels here), it should be good enough for most use cases.

Model Rigging

Successfully implementing this method hinges (pun not intended) on how your avatar’s mouth is rigged:

- Instead of relying on a pre-made animation in Blockbench, you’ll be directly manipulating the relevant model parts in Lua (e.g,

LowerJaw,UpperMouth, etc.). - You must identify the exact names of the model part(s) you want to animate in Figura (e.g.,

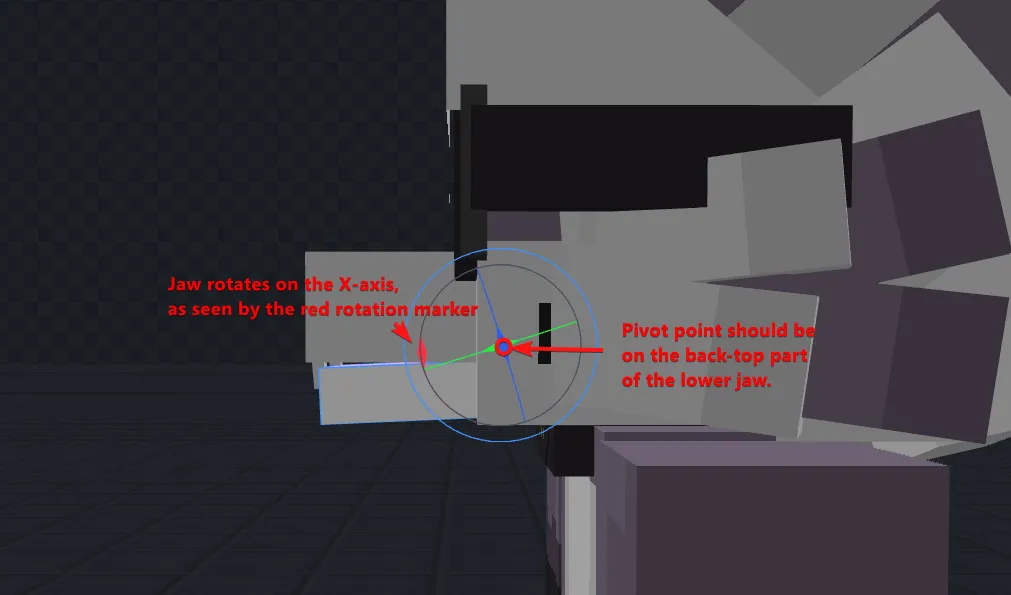

model.root.head.LowerJaw). - Knowing which axis (X, Y, or Z) your mouth part should rotate on is crucial. You can easily verify this by selecting the part in Blockbench and observing its rotation handles.

- It is good practice to have a “clamping point”. Establish a resting position/angle and a maximum open position/angle for the mouth part(s), we will use these later to prevent unnatural movement. Note these values down.

The exact parts and axes depend on your avatar’s design. My character has a pretty big snout, so I have it set up as the following:

For my avatar, the lower jaw’s resting angle is -17.5° (X-axis), and the maximum open angle is -45°.
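Once you have those two angles, a convenient way to think about the jaw is as a single "openness" value from 0 (resting) to 1 (fully open) that is clamped and then mapped onto the angle range. The plain-Lua sketch below does that with linear interpolation. It is an alternative framing, not the formula this guide uses later; `jawAngle` and `clamp` are hypothetical helpers, and `clamp` is written out because Figura's `math.clamp` is not part of standard Lua.

```lua
-- Plain-Lua sketch: map a normalized openness (0 = resting, 1 = fully open)
-- onto the jaw's angle range, clamped so it never passes either limit.
-- jawAngle and clamp are hypothetical helpers; the angles are the ones above.
local REST_ANGLE = -17.5 -- resting angle on the X axis, in degrees
local MAX_ANGLE = -45    -- maximum open angle on the X axis, in degrees

-- Keep value within [low, high]; low must not be greater than high.
local function clamp(value, low, high)
  return math.max(low, math.min(high, value))
end

-- Linear interpolation from REST_ANGLE (t = 0) to MAX_ANGLE (t = 1).
local function jawAngle(openness)
  local t = clamp(openness, 0, 1)
  return REST_ANGLE + (MAX_ANGLE - REST_ANGLE) * t
end

-- Usage: half open lands halfway between the two angles (-31.25 degrees).
local halfOpen = jawAngle(0.5)
```

Because the interpolation runs from `REST_ANGLE` toward `MAX_ANGLE`, the same code works whether your jaw opens with negative or positive rotation; only the two constants change.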

Understanding FiguraSVC’s Events

We’ll primarily use two events provided by FiguraSVC:

- `events.host_microphone(event)`: Called only on your client (the host) when your microphone transmits audio. Contains the raw audio data. Cannot modify the audio sent to others (except for server OPs).

- `events.microphone(event)`: Called on all other clients listening to you when they receive your audio packet. Contains the raw audio data. This is where you could potentially apply client-side audio modifications (like voice changers, if enabled).

Splitting the events this way prevents malicious users from easily injecting modified, potentially harmful audio directly into the stream for others to hear via `host_microphone`. Keeping all voice modifications in `microphone`, which runs on each listener's own client, makes them very easy for others to disable.

For our use case, we will bind both of these events to the same function. `microphone` only runs on other players' clients, so binding `host_microphone` as well is what lets you see your own avatar's mouth move.

Implementing the Mouth Movement

Here is the completed code; the steps below build it up one piece at a time.

```lua
local modelRoot = models.model.root
local ORIGINAL_MOUTH_ROTATION = -17.5 -- The original rotation angle of the mouth part
local MAX_MOUTH_ROTATION = -45 -- The maximum rotation angle of the mouth part

function pings.on_talk(state)
  if not client:isModLoaded("figurasvc") then
    animations.model.talk:setPlaying(state)
  end
end

local function onMicrophoneOff(state)
  if state then return end -- Don't do anything if the microphone is still on.
  modelRoot.body.head.LowerMouth:setRot(ORIGINAL_MOUTH_ROTATION, 0, 0)
  modelRoot.body.head.UpperMouth:setRot(0, 0, 0) -- UpperMouth rests at 0 in this setup
end

local function onMicrophone(event)
  local rawAudioLevel = voice:getLevel(event)
  -- Anything above 1.5 might be too intense, but adjust as needed.
  local smoothedAudioLevel = voice:smoothing(rawAudioLevel, 1.1)
  local clampedAudioLevel = math.clamp(
    ORIGINAL_MOUTH_ROTATION + -smoothedAudioLevel * 0.3,
    MAX_MOUTH_ROTATION, -- Lower bound (e.g., -45)
    ORIGINAL_MOUTH_ROTATION -- Upper bound (e.g., -17.5)
  )
  modelRoot.body.head.LowerMouth:setRot(clampedAudioLevel, 0, 0)
  modelRoot.body.head.UpperMouth:setRot(-smoothedAudioLevel * 0.05, 0, 0)
end

if client:isModLoaded("figurasvc") then
  events.host_microphone = onMicrophone
  events.microphone = onMicrophone
  voice:pingOnActive(pings.on_talk)
  voice:callOnActive(onMicrophoneOff)
end
```

The first thing you should do is create a general function that will be called whenever the microphone is active. Make sure to wrap the event registration in a mod check.

```lua
-- This function will be called when the microphone is active.
-- It will be called on the host client and all other clients.
local function onMicrophone(event)
  -- **To be continued**
end

if client:isModLoaded("figurasvc") then
  events.host_microphone = onMicrophone
  events.microphone = onMicrophone
end
```


FiguraSVC provides a few utility functions that make processing our audio much easier. We will be using:

- `voice:getLevel(audio)`

- `voice:smoothing(level, sensitivity)`: This is simply an exponential moving average with a built-in garbage collector (the stored average resets to 0 after one second without updates). You can replace it with your own system if you want, but this method works fine for most use cases.

Java source code for `voice:smoothing`:

```java
private final LoadingCache<UUID, Double> smoothingCache = CacheBuilder.newBuilder()
        .expireAfterWrite(1, TimeUnit.SECONDS)
        .build(CacheLoader.from(() -> 0D));

@LuaWhitelist
@LuaMethodDoc(
        overloads = @LuaMethodOverload(
                argumentTypes = {Double.class, Double.class},
                argumentNames = {"audioLevel", "sensitivity"}
        ),
        value = "voice.smoothing"
)
public double smoothing(Double audioLevel, Double sensitivity) {
    try {
        double smoothingCacheValue = smoothingCache.get(owner.owner);
        smoothingCache.put(owner.owner, sensitivity * audioLevel + (1 - sensitivity) * smoothingCacheValue);
        return smoothingCache.get(owner.owner);
    } catch (Exception e) {
        return 0;
    }
}
```

With both utilities in place, `onMicrophone` looks like this:

```lua
local function onMicrophone(event)
  local rawAudioLevel = voice:getLevel(event)
  -- Anything above 1.5 might be too intense, but adjust as needed.
  local smoothedAudioLevel = voice:smoothing(rawAudioLevel, 1.1)
  -- TODO: Apply level to rotation
end

if client:isModLoaded("figurasvc") then
  events.host_microphone = onMicrophone
  events.microphone = onMicrophone
end
```
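Reading the Java above, each call computes `sensitivity * audioLevel + (1 - sensitivity) * previous`, where `previous` starts at 0 and is reset once the cached value has gone a second without being written. The plain-Lua sketch below reproduces only that update rule, in case you decide to roll your own smoothing; `makeSmoother` is a hypothetical helper. Note what the formula implies for the second argument: values between 0 and 1 blend the newest level with the running average (smaller is smoother), while values above 1, like the 1.1 used in this guide, give the newest level more than full weight and subtract a little of the previous average, so the result slightly overshoots whenever the level changes.

```lua
-- Plain-Lua sketch of the exponential moving average that voice:smoothing
-- computes, following the Java formula above (the one-second expiry is left out).
-- makeSmoother is a hypothetical helper, not part of FiguraSVC.
local function makeSmoother(sensitivity)
  local average = 0 -- matches the cache's starting value (0D)
  return function(level)
    -- new average = sensitivity * newest level + (1 - sensitivity) * previous average
    average = sensitivity * level + (1 - sensitivity) * average
    return average
  end
end

-- Usage: with sensitivity 0.5, each result lies halfway between the new level
-- and the previous average: 5, then 7.5, then 3.75.
local smooth = makeSmoother(0.5)
local first = smooth(10)
local second = smooth(10)
local third = smooth(0)
```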


This next part is different for everyone, but the general idea is that you use the smoothed audio level to rotate or scale your avatar's mouth.

Make sure you store the original rotation angle of the mouth part that you noted down earlier.

```lua
local modelRoot = models.model.root
local ORIGINAL_MOUTH_ROTATION = -17.5 -- The original rotation angle of the mouth part
local MAX_MOUTH_ROTATION = -45 -- The maximum rotation angle of the mouth part

local function onMicrophone(event)
  local rawAudioLevel = voice:getLevel(event)
  -- Anything above 1.5 might be too intense, but adjust as needed.
  local smoothedAudioLevel = voice:smoothing(rawAudioLevel, 1.1)
  local clampedAudioLevel = math.clamp(
    ORIGINAL_MOUTH_ROTATION + -smoothedAudioLevel * 0.3,
    MAX_MOUTH_ROTATION, -- Lower bound (e.g., -45)
    ORIGINAL_MOUTH_ROTATION -- Upper bound (e.g., -17.5)
  )
  modelRoot.body.head.LowerMouth:setRot(clampedAudioLevel, 0, 0)
  modelRoot.body.head.UpperMouth:setRot(-smoothedAudioLevel * 0.05, 0, 0)
end

if client:isModLoaded("figurasvc") then
  events.host_microphone = onMicrophone
  events.microphone = onMicrophone
end
```

- The first new addition is the set of variables at the top; these should be fairly self-explanatory.

- In the `math.clamp` expression, you might notice that the smoothed level is negated. This is because, to open my avatar's mouth, the rotation needs to be counter-clockwise (negative). If it were the opposite, my bottom jaw would clip through the upper mouth, and I wouldn't call that good. If your mouth opens the other way, remove the negation and swap the order of the two bounds in the `math.clamp` call, so that the original rotation becomes the lower bound and the maximum rotation the upper bound.

If you are attempting to use this exact code, you will absolutely have to fiddle with the numbers and the math; not every avatar is built the same way.
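Because every avatar needs different numbers, it can help to check the formula outside the game first. The plain-Lua sketch below evaluates the same expression as the `math.clamp` call in `onMicrophone` for a few smoothed levels. `lowerJawRotation` is a hypothetical name, and `math.max`/`math.min` stand in for Figura's `math.clamp`, assuming it clamps a value into `[min, max]`.

```lua
-- Plain-Lua check of the jaw formula from onMicrophone (runs outside Figura).
-- lowerJawRotation is a hypothetical name; math.max/math.min stand in for
-- Figura's math.clamp, assuming it clamps value into [min, max].
local ORIGINAL_MOUTH_ROTATION = -17.5
local MAX_MOUTH_ROTATION = -45
local LEVEL_TO_DEGREES = 0.3 -- the multiplier used in the snippet above

local function lowerJawRotation(smoothedAudioLevel)
  local target = ORIGINAL_MOUTH_ROTATION + -smoothedAudioLevel * LEVEL_TO_DEGREES
  -- Lower bound MAX_MOUTH_ROTATION (fully open), upper bound ORIGINAL_MOUTH_ROTATION (rest).
  return math.max(MAX_MOUTH_ROTATION, math.min(ORIGINAL_MOUTH_ROTATION, target))
end

-- Usage: print the jaw angle for a few smoothed levels.
for _, level in ipairs({ -5, 0, 50, 100 }) do
  print(level, lowerJawRotation(level))
end
```

With these constants the jaw reaches its limit at a smoothed level of 27.5 / 0.3 ≈ 91.7, so anything louder is clamped to -45°. Negative levels, which `voice:smoothing` can return with a sensitivity above 1 (for example when a quiet packet follows a loud one), are clamped back to the resting angle.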


At this point, your script should work, but you might notice that your avatar's mouth hangs open after you finish talking. This is because no code resets the mouth to its original position when you stop talking. To fix this, we can use the `voice:callOnActive` method to reset the mouth position when the microphone is turned off.

```lua
local modelRoot = models.model.root
local ORIGINAL_MOUTH_ROTATION = -17.5 -- The original rotation angle of the mouth part
local MAX_MOUTH_ROTATION = -45 -- The maximum rotation angle of the mouth part

local function onMicrophoneOff(state)
  if state then return end -- Don't do anything if the microphone is still on.
  modelRoot.body.head.LowerMouth:setRot(ORIGINAL_MOUTH_ROTATION, 0, 0)
  modelRoot.body.head.UpperMouth:setRot(0, 0, 0) -- UpperMouth rests at 0 in this setup
end

local function onMicrophone(event)
  local rawAudioLevel = voice:getLevel(event)
  -- Anything above 1.5 might be too intense, but adjust as needed.
  local smoothedAudioLevel = voice:smoothing(rawAudioLevel, 1.1)
  local clampedAudioLevel = math.clamp(
    ORIGINAL_MOUTH_ROTATION + -smoothedAudioLevel * 0.3,
    MAX_MOUTH_ROTATION, -- Lower bound (e.g., -45)
    ORIGINAL_MOUTH_ROTATION -- Upper bound (e.g., -17.5)
  )
  modelRoot.body.head.LowerMouth:setRot(clampedAudioLevel, 0, 0)
  modelRoot.body.head.UpperMouth:setRot(-smoothedAudioLevel * 0.05, 0, 0)
end

if client:isModLoaded("figurasvc") then
  events.host_microphone = onMicrophone
  events.microphone = onMicrophone
  voice:callOnActive(onMicrophoneOff)
end
```
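With `callOnActive`, the jaw snaps straight back to rest. If you would prefer it to close gradually, one common approach (not something FiguraSVC provides) is to move the angle a fixed fraction of the remaining distance on every tick. The plain-Lua sketch below shows just that math; `easeTowards` and the 0.5 factor are hypothetical choices, and hooking it up to a Figura tick or render event is left to you.

```lua
-- Plain-Lua sketch: ease an angle toward a target instead of snapping to it.
-- easeTowards and the 0.5 factor are hypothetical choices, not part of FiguraSVC.
local function easeTowards(current, target, factor)
  -- Move `factor` (0..1) of the remaining distance; 1 snaps immediately, 0 never moves.
  return current + (target - current) * factor
end

-- Usage: a jaw left at -45 degrees closing toward its -17.5 degree rest angle.
local angle = -45
for _ = 1, 3 do
  angle = easeTowards(angle, -17.5, 0.5)
end
-- Per tick: -45 -> -31.25 -> -24.375 -> -20.9375
```

Each tick halves the remaining distance, so the jaw closes quickly at first and settles smoothly; a larger factor closes it faster.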

There is only one more thing we need to add: the fallback ping. It is the same as the one we used in the first method, except that it only plays the animation on clients that don't have FiguraSVC installed; clients with FiguraSVC use the level-based movement instead.

```lua
local modelRoot = models.model.root
local ORIGINAL_MOUTH_ROTATION = -17.5 -- The original rotation angle of the mouth part
local MAX_MOUTH_ROTATION = -45 -- The maximum rotation angle of the mouth part

function pings.on_talk(state)
  if not client:isModLoaded("figurasvc") then
    animations.model.talk:setPlaying(state)
  end
end

local function onMicrophoneOff(state)
  if state then return end -- Don't do anything if the microphone is still on.
  modelRoot.body.head.LowerMouth:setRot(ORIGINAL_MOUTH_ROTATION, 0, 0)
  modelRoot.body.head.UpperMouth:setRot(0, 0, 0) -- UpperMouth rests at 0 in this setup
end

local function onMicrophone(event)
  local rawAudioLevel = voice:getLevel(event)
  -- Anything above 1.5 might be too intense, but adjust as needed.
  local smoothedAudioLevel = voice:smoothing(rawAudioLevel, 1.1)
  local clampedAudioLevel = math.clamp(
    ORIGINAL_MOUTH_ROTATION + -smoothedAudioLevel * 0.3,
    MAX_MOUTH_ROTATION, -- Lower bound (e.g., -45)
    ORIGINAL_MOUTH_ROTATION -- Upper bound (e.g., -17.5)
  )
  modelRoot.body.head.LowerMouth:setRot(clampedAudioLevel, 0, 0)
  modelRoot.body.head.UpperMouth:setRot(-smoothedAudioLevel * 0.05, 0, 0)
end

if client:isModLoaded("figurasvc") then
  events.host_microphone = onMicrophone
  events.microphone = onMicrophone
  voice:pingOnActive(pings.on_talk)
  voice:callOnActive(onMicrophoneOff)
end
```

🎉 And that is it! You now have a fully functional mouth movement system that uses the audio levels to drive the animation. This is a pretty good starting point, but you can always improve it by adding more features or tweaking the numbers to your liking.

Afterword

I hope that you found this guide at least somewhat helpful; I really don't write documentation that often. If you have any questions, feel free to reach out to me on Discord at @knownsh. I am always happy to help out with any issues you might have. Although my replies might be slow, I will get back to you as soon as I can.

There is also a dedicated thread on the Figura Discord for any questions or issues you might have. I am also open to suggestions for new features or improvements to the mod, so feel free to reach out if you have any ideas.